Compact AI Acceleration: Geniatech’s M.2 Module for Scalable Deep Learning

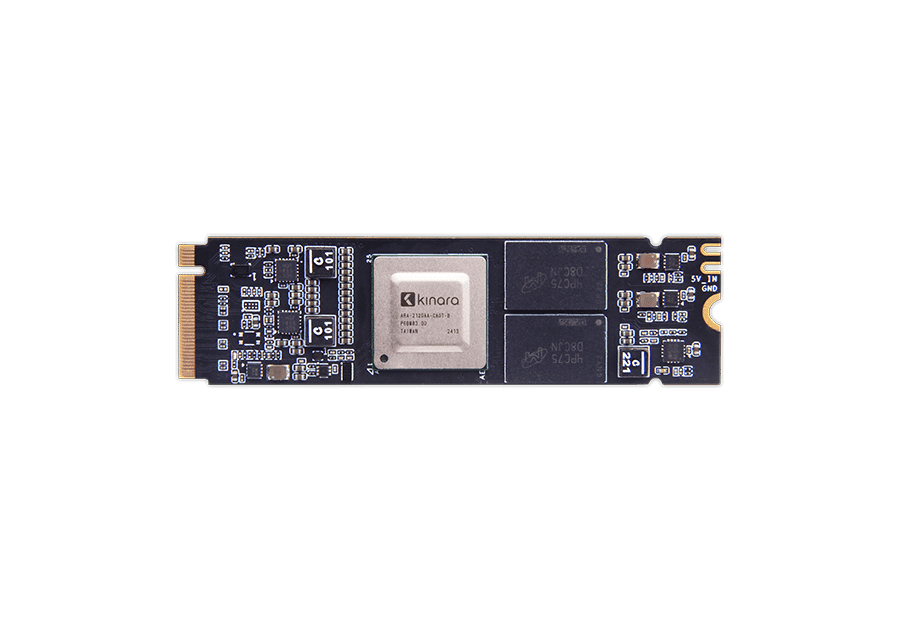

Geniatech M.2 AI Accelerator Module: Compact Power for Real-Time Edge AI

Artificial intelligence (AI) continues to transform how industries operate, especially at the edge, where fast processing and real-time insights are not just desirable but critical. The M.2 AI accelerator has emerged as a compact yet powerful answer to the demands of edge AI applications. Delivering strong performance in a small footprint, this component is quickly driving innovation in sectors from smart cities to industrial automation.

The Significance of Real-Time Processing at the Edge

Edge AI bridges the gap between people, devices, and the cloud by enabling real-time data processing where it is most needed. Whether the workload is an autonomous vehicle, a smart security camera, or an IoT sensor, decisions at the edge must be made within milliseconds. Traditional computing approaches have struggled to keep up with these demands.

Enter the M.2 AI Accelerator Module. By packing high-performance machine learning capabilities into a compact form factor, this device is reshaping what real-time processing looks like. It provides the speed and efficiency organizations need without relying solely on cloud infrastructure, which can introduce latency and raise costs.
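The latency argument can be made concrete with a minimal Python sketch. It compares a cloud round trip with local inference against a per-frame deadline. Every number in it is a hypothetical placeholder and not a measurement of Geniatech's module or of any cloud service. That covers the 30 fps deadline, the 60 ms network round trip, and both inference times. The function names are illustrative only.

```python
# Hypothetical latency-budget check: none of these numbers are measurements.

FRAME_DEADLINE_MS = 1000.0 / 30.0  # one frame period at 30 fps (about 33.3 ms)


def cloud_latency_ms(network_rtt_ms: float, server_inference_ms: float) -> float:
    """Round trip to a remote server plus inference time on that server."""
    return network_rtt_ms + server_inference_ms


def edge_latency_ms(local_inference_ms: float) -> float:
    """Inference on a local accelerator; this simplified model has no network hop."""
    return local_inference_ms


def meets_deadline(latency_ms: float, deadline_ms: float = FRAME_DEADLINE_MS) -> bool:
    """True if a result arrives before the next frame is due."""
    return latency_ms <= deadline_ms


if __name__ == "__main__":
    cloud = cloud_latency_ms(network_rtt_ms=60.0, server_inference_ms=8.0)
    edge = edge_latency_ms(local_inference_ms=20.0)
    print(f"cloud: {cloud:.1f} ms, within deadline: {meets_deadline(cloud)}")
    print(f"edge:  {edge:.1f} ms, within deadline: {meets_deadline(edge)}")
```

The point is structural. A cloud path pays the network round trip on every frame, so even a fast server can miss a tight deadline that a slower local accelerator meets.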

What Makes the M.2 AI Accelerator Module Stand Out?

• Compact Design

One of the standout features of this AI accelerator module is its small M.2 form factor. It fits easily into a wide range of embedded systems, servers, and edge devices without extensive hardware modifications. This makes deployment simpler and far more space-efficient than with larger alternatives.

• High Throughput for Machine Learning Tasks

Built with advanced neural network processing capabilities, the module delivers high throughput for tasks such as image recognition, video analysis, and speech processing. Its architecture is designed to run complex ML models smoothly in real time.
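One rough way to relate throughput to video analytics capacity is to divide the accelerator's sustained inference rate by each camera's frame rate. The sketch below assumes a hypothetical 200 inferences per second and one inference per frame. Neither figure comes from Geniatech's specifications, and the helper names are illustrative.

```python
import math


def max_streams(inferences_per_second: float, stream_fps: float) -> int:
    """Upper bound on how many video streams a sustained inference rate can serve.

    Assumes one inference per frame and ignores decode/pre/post-processing time.
    """
    if stream_fps <= 0:
        raise ValueError("stream_fps must be positive")
    return math.floor(inferences_per_second / stream_fps)


def ms_per_inference(inferences_per_second: float) -> float:
    """Average time available per inference at the given sustained rate."""
    if inferences_per_second <= 0:
        raise ValueError("inferences_per_second must be positive")
    return 1000.0 / inferences_per_second


if __name__ == "__main__":
    rate = 200.0  # hypothetical sustained throughput, not a vendor figure
    print(max_streams(rate, stream_fps=30.0))  # prints 6
    print(f"{ms_per_inference(rate):.1f} ms per inference")  # prints 5.0 ms per inference
```

Real pipelines also spend time decoding, resizing, and post-processing each frame. The actual number of streams will therefore be lower than this upper bound.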

• Energy Efficient

Power consumption is a key concern for edge devices, especially those operating in remote or power-sensitive environments. The module is optimized for performance per watt while sustaining consistent, reliable workloads, making it suitable for battery-operated or low-power systems.
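Performance per watt can be expressed as inferences per joule: throughput divided by average power draw. The minimal sketch below compares two hypothetical devices. Their throughput and power numbers are invented for illustration and describe neither Geniatech's module nor any real product. The battery estimate also assumes the accelerator is the only load.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    inferences_per_second: float  # sustained throughput (hypothetical)
    power_watts: float            # average power draw under that load (hypothetical)

    def inferences_per_joule(self) -> float:
        # (inferences / s) / (J / s) = inferences per joule
        return self.inferences_per_second / self.power_watts

    def runtime_hours(self, battery_wh: float) -> float:
        # Assumes the accelerator is the only load on the battery.
        return battery_wh / self.power_watts


if __name__ == "__main__":
    compact = Accelerator("compact module", inferences_per_second=200.0, power_watts=5.0)
    larger = Accelerator("larger card", inferences_per_second=300.0, power_watts=15.0)
    for dev in (compact, larger):
        print(f"{dev.name}: {dev.inferences_per_joule():.1f} inf/J, "
              f"{dev.runtime_hours(battery_wh=50.0):.1f} h on 50 Wh")
```

In this made-up comparison the larger card has more raw throughput but half the inferences per joule. On a battery or power budget, that ratio matters more than peak speed.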

• Flexible Applications

From healthcare and logistics to smart retail and manufacturing automation, the M.2 AI Accelerator Module is opening up new possibilities across industries. For example, it can power advanced video analytics for smart surveillance or enable predictive maintenance by analyzing sensor data in industrial settings.

Why Edge AI Is Gaining Momentum

The rise of edge AI is driven by growing data volumes and an increasing number of connected devices. According to recent market figures, there are over 14 billion IoT devices operating worldwide, a number expected to surpass 25 billion by 2030. As this shift continues, traditional cloud-dependent AI architectures face bottlenecks such as increased latency and privacy concerns.

Edge AI eases these challenges by processing data locally, delivering near-instantaneous insights while safeguarding user privacy. The M.2 AI Accelerator Module aligns closely with this trend, allowing companies to harness the full potential of edge intelligence without compromising operational efficiency.

Key Statistics Showing Its Impact

To understand the impact of such technologies, consider these highlights from recent industry studies:

• Growth in the Edge AI Market: The global edge AI hardware market is projected to grow at a compound annual growth rate (CAGR) exceeding 20% through 2028. Devices such as the M.2 AI Accelerator Module are key to driving this growth.

• Performance Benchmarks: Labs testing AI accelerator modules under real-world conditions have reported up to 40% improvement on real-time inference workloads compared with mainstream edge processors.

• Adoption Across Industries: Around 50% of enterprises deploying IoT devices are expected to incorporate edge AI applications by 2025 to improve operational efficiency.
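A CAGR compounds every year, so its effect is easy to underestimate. A value growing at rate r for t years becomes V_t = V_0 * (1 + r) ** t. The minimal sketch below applies that standard formula to a market indexed at 100 and also inverts it. The 20% rate mirrors the growth figure quoted in the market statistic. The index base, the five-year horizon, and the function names are arbitrary illustrations, not data from the cited studies.

```python
def project_value(current: float, cagr: float, years: int) -> float:
    """Compound a value forward: V_t = V_0 * (1 + r) ** t."""
    return current * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: float) -> float:
    """Invert the formula above: r = (V_t / V_0) ** (1 / t) - 1."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # A market indexed to 100 today, growing at a hypothetical constant 20% a year.
    for year in range(6):
        print(year, round(project_value(100.0, 0.20, year), 1))
```

At a constant 20% CAGR, a market roughly two-and-a-half-folds in five years, since 1.2 ** 5 is about 2.49.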

With figures like these underscoring its relevance, the M.2 AI Accelerator Module is not just a tool but a game-changer in the shift toward smarter, faster, and more scalable edge AI solutions.

Pioneering AI at the Edge

The M.2 AI Accelerator Module represents more than just another piece of hardware; it is an enabler of next-generation innovation. Businesses adopting this technology can stay ahead of the curve in deploying agile, real-time AI systems fully optimized for edge environments. Small yet powerful, it embodies the progress driving the AI revolution.

From its ability to run machine learning models on the fly to its versatility and power efficiency, this module shows that edge AI is not a distant dream. It is happening today, and with tools like this, it is easier than ever to build smarter, faster AI closer to where the action happens.